This was originally a Facebook post with the sections as comments. I turned it into a Google document, and finally into a blog post to make it more accessible and easier to find and update.

Introduction

The main way to keep up to date with what’s happening on the ground at the Black Lives Matter protests is via social media (Twitter in particular).

It’s really confusing, though. A lot of people and organisations are acting in bad faith: deliberately making communication and fact-checking difficult, and using manipulation strategies to drown out the real information about what’s happening on the ground.

Twitter genuinely does have a lot of really useful citizen journalism and information, but it can also be a pretty terrible place, because the owner lets racists, bigots, and fake accounts operated by authoritarian governments and the far right run free, and does very little to stop them.

Many of the information-control and disinformation methods that 20th-century authoritarian regimes used to quash dissent have been updated into digital strategies. Putin uses them, the Chinese government uses them, the Republican Party and lots of far-right groups use them, and Dominic Cummings made his career and money using them for the Leave campaign.

Here are some common social media manipulation strategies and tricks, with links, and how to spot them:

Narratives

Political campaigns talk about “narratives”: how the story is presented, who is cast as the hero or villain, and what perspective you are encouraged to see things from. Whoever controls the narrative controls how people see the situation.

When discussing narratives, people talk about “framing” something, as if it were a picture hung on a wall showing that view.

Bots and botfarms

A big one: automated fake accounts (aka bots). They pretend to be real people, but what they post comes from a script set up by their owner. The guide at the link below is really thorough and has lots of image examples of things to look out for.

https://medium.com/dfrlab/botspot-twelve-ways-to-spot-a-bot-aedc7d9c110c

The Digital Forensic Research Lab (DFRLab), which wrote this article, has lots of other good articles about social media and authoritarianism around the world: https://medium.com/@DFRLab

Astroturfing

The higher-budget version of bots is astroturfing (i.e. creating fake grassroots support). The comments are written by real people rather than automated, but the accounts are still fake.

Putin is known to have an in-house department of people doing this. Any time there’s criticism of him in the Guardian, for example, the comments are always mysteriously full of people defending him who claim to be from places in the UK, yet whose English is either too perfect and formal, or good but with small mistakes typical of Russian speakers.

A recent example of astroturfing happened last week, when Twitter and the comment sections of newspaper stories were suddenly full of people defending Dominic Cummings with identical arguments.

“Astroturf also tends to reserve all of its public skepticism for those exposing wrongdoing rather than the wrongdoers. A perfect example would be when people question those who question authority rather than the authority.”

Why bots and astroturfing are used

Setting up bots and paying astroturfers is meant to drown out genuine voices and information with the agenda of the person or organisation paying for them. It’s harder to find the real posts because there are so many fake ones.

They hope that their artificially amplified message will then be accepted as the general public opinion.

The “firehose of falsehood” is a propaganda technique in which the same lie is repeated continually, in many different places, to try to give it legitimacy.

https://en.wikipedia.org/wiki/Firehose_of_falsehood

It also takes advantage of the fact that platform algorithms track what seems popular and show “popular” content to users more often. Twitter and Instagram have charts of trending topics on their front pages. If bots and astroturfers flood the hashtag of a popular topic (hashtag flooding), they bury the real information people are posting on that subject. To see the genuine posts, you have to be following the right people, rather than coming across them on the front page.

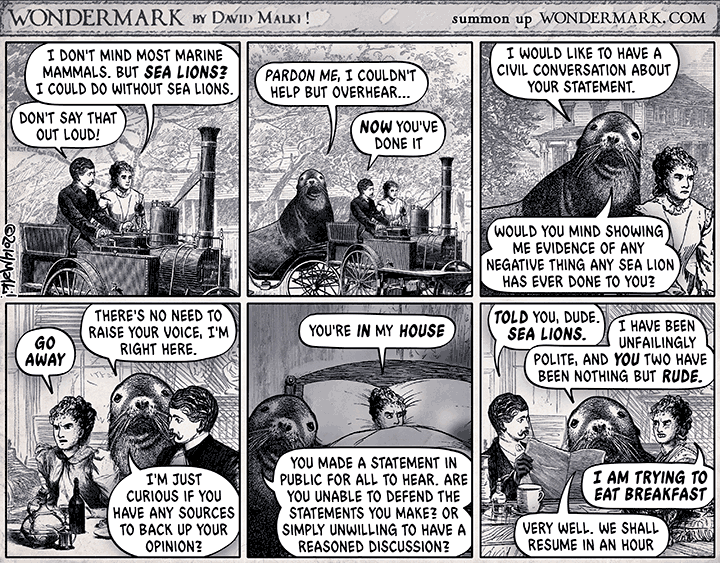

Sealioning

(Named after a Wondermark comic; the original is linked below.)

Sealioning is when someone pretends to be politely asking lots of questions but doesn’t actually care about the answers. They just want to waste everyone’s time and energy and derail the conversation.

The questioner deliberately puts a lot of emphasis on how they are just sooo civil and sophisticated and such a wonderful debater, and on how the person whose time they are wasting is rude and ignorant for not spending lots of energy talking to them.

A common variation of sealioning is to demand “evidence” from someone. If they do waste their time finding some, it’s somehow never the right sort of evidence and is always dismissed. The intention isn’t to actually get information; it’s to deliberately waste someone’s time and energy.

Original source of the comic

http://wondermark.com/1k62/

Whataboutery

This is another way to waste people’s time and energy and derail the conversation away from the original point. For example, if the original topic was “the police target and brutalise black people”, there will be replies like “what about gangs?” or “what about this other case that has nothing to do with what you’re talking about?”

The aim is to use up so much energy and time addressing these tangents that no one gets to discuss the original topic in detail.

I am Very Reasonable

Another tactic is to frame anyone who points out injustice or asks for change as unstable, pushy or a bit crazy, while the person supporting the status quo casts themself in the narrative as sensible, grown-up and reasonable.

Deliberately misspelling hashtags

Manipulators pick a currently trending hashtag and deliberately misspell it slightly. Then loads of bots and astroturfers use the misspelled version. It shows up in Twitter’s trending chart on the front page alongside the real tag, but the slightly wrong tag contains ONLY fake accounts, artificially boosting the public visibility of the agenda of whoever paid for them.

For example, a current one might be “blacklivesmater” with one t, filled with fake Twitter accounts claiming that the protesters did something wrong and deserved to be beaten by the police.

(Yes, I know, it’s weird that the Christian Science Monitor has a good article about this. Surprisingly, it has won Pulitzer Prizes too.)
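For readers who like a bit of code: the trick works because the fake tag is only a letter or two away from the real one, which is easy to check mechanically. Below is a minimal Python sketch, assuming you already have a list of trending tags as plain strings. The function names (edit_distance, flag_lookalike_tags) and the two-edit threshold are illustrative choices, not anything Twitter itself uses. It will also flag harmless variants such as a plural, so treat a match as a prompt to look closer, not as proof.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: the fewest single-letter insertions,
    deletions or substitutions needed to turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete a letter
                            curr[j - 1] + 1,      # insert a letter
                            prev[j - 1] + cost))  # swap a letter
        prev = curr
    return prev[-1]


def flag_lookalike_tags(genuine: str, trending: list[str], max_edits: int = 2) -> list[str]:
    """Return trending tags that are close to, but not identical to, the genuine tag.
    The max_edits threshold is an illustrative assumption."""
    target = genuine.lower().lstrip("#")
    flagged = []
    for tag in trending:
        distance = edit_distance(target, tag.lower().lstrip("#"))
        if 0 < distance <= max_edits:
            flagged.append(tag)
    return flagged


# Hypothetical trending list for illustration.
trending = ["#BlackLivesMatter", "#blacklivesmater", "#GeorgeFloyd"]
print(flag_lookalike_tags("BlackLivesMatter", trending))
```

Here “#blacklivesmater” is one missing letter away from the real tag, so it gets flagged, while the real tag itself (distance 0) and the unrelated “#GeorgeFloyd” are left alone.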

Divide and conquer

This is also a strategy used in elections: make people who would otherwise be allies fight, so that all their attention goes on fighting each other rather than on the real issue or enemy.

Fake identities to sow division from within

Does the account bio claim to be a particular marginalised identity, but all the comments are attacking and criticising other people from that group?

For example, a common one is an account whose bio claims to be a trans person, but which is really unpleasant to other trans users, criticising them for doing everything wrong and harming others in some mysterious, never-explained way.

Or perhaps they tell people that they’re wrong to speak out about injustice. They’re doing anti-racism or anti-homophobia or anti-misogyny all wrong and hurting people. It’s often not actually made clear what the person is doing wrong either. It’s just this nebulous label of wrongness and guilt.

This is a hate-group strategy: they pretend to be one of the people they are attacking as cover. It also doubles as a way of playing divide and conquer.

How to check out a Twitter account

1) Click on the user’s name

2) Check the account creation date

3) Click on tweets and replies

4) Does this seem like a real person? Do they seem to have a real life? Or do they only post about one (usually political) topic? Nobody talks about only one topic.

5) Scroll back a bit- how do they interact with other people? Do they have real conversations? Do they seem like a real person or do they only argue?

6) Was it created a while ago, but has no other posts about anything until it suddenly weighs in aggressively on a big news story? That’s usually a sign it’s a spare fake account that has recently been fired up to make up the numbers.

7) Has this account dramatically changed identity, tone or topic at some point, or even switched language? That’s usually a sign of a fake account.

8) Number of posts. Does anyone really have time to post 50 times every day about how a certain politician should be re-elected? Does it almost feel like it’s on a schedule? Do they post heavily at times of day that clash with the time zone they claim to be in? Genuine heavy Twitter users have conversations with people, and their activity has natural ebbs and flows.
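If you have a list of an account’s post times, point 8 can be roughed out in a few lines of Python. This is a minimal sketch under stated assumptions: the timestamps are timezone-aware datetimes you have collected yourself, “night” means midnight to 6am in the time zone the account claims, and the thresholds (50 posts a day, 30% of posts at night) and function names are illustrative guesses, not established cut-offs. It can only raise questions; plenty of real people are night owls or shift workers.

```python
from datetime import datetime, timedelta, timezone


def posting_profile(timestamps: list[datetime], utc_offset_hours: float) -> dict:
    """Average posts per day, and the share of posts made between midnight and 6am
    in the time zone the account claims to be in (given as an offset from UTC)."""
    if not timestamps:
        return {"posts_per_day": 0.0, "night_share": 0.0}
    claimed_tz = timezone(timedelta(hours=utc_offset_hours))
    # Timestamps must be timezone-aware; convert each one to the claimed local time.
    local = sorted(t.astimezone(claimed_tz) for t in timestamps)
    # Count calendar days from the first post to the last, inclusive.
    span_days = (local[-1].date() - local[0].date()).days + 1
    night_posts = sum(1 for t in local if t.hour < 6)
    return {
        "posts_per_day": len(local) / span_days,
        "night_share": night_posts / len(local),
    }


def warning_signs(profile: dict, max_posts_per_day: float = 50,
                  max_night_share: float = 0.3) -> list[str]:
    """Return readable warnings; the default thresholds are illustrative guesses."""
    warnings = []
    if profile["posts_per_day"] > max_posts_per_day:
        warnings.append(f"posts {profile['posts_per_day']:.0f} times a day on average")
    if profile["night_share"] > max_night_share:
        warnings.append(f"{profile['night_share']:.0%} of posts are between midnight and 6am local time")
    return warnings


# Hypothetical account: 60 posts a day, one every 5 minutes from 00:00 UTC, for 3 days.
start = datetime(2020, 6, 1, 0, 0, tzinfo=timezone.utc)
posts = [start + timedelta(days=d, minutes=5 * i) for d in range(3) for i in range(60)]

uk_claim = posting_profile(posts, utc_offset_hours=1)  # claims the UK (BST, UTC+1, in June)
print(warning_signs(uk_claim))
```

Run against the same posts with utc_offset_hours=-5 (a US east coast account), the night-time warning disappears, because 00:00 to 05:00 UTC is evening there, though the volume warning remains. That is why the check uses the time zone the account claims rather than your own.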